Modular Arithmatic

Some competitive programming techniques related to Modular Arithmatic.

First off, some important identities about the modulo operator:

(a mod m)+(b mod m) mod m=a+b mod m

(a mod m)−(b mod m) mod m=a−b mod m

(a mod m)⋅(b mod m) mod m=a⋅b mod m

These identities have the very important consequence in that you generally don't need to ever store the "true" values of the large numbers you're working with, only their residues mod m

. You can then add, subtract, and multiply with them as much as you need for your problem, taking the modulo as often as needed to avoid integer overflow. You may even decide to wrap them into their own object class with overloaded operators if your language supports them, though you may have to be careful of any object allocation overhead. If you use Kotlin like I do, consider using the inline class feature.

But what about division and fractions? That's slightly more complicated, and requires a concept called the "modular multiplicative inverse". The modular multiplicative inverse of a number a

is the number a−1

such that a⋅a−1 mod m=1

. You may notice that this is similar to the concept of a reciprocal, but here we don't want a fraction; we want an integer, specifically an integer between 0

and m−1

inclusive.

But how do you actually find such a number? Bruteforcing all numbers to a prime number close to a billion will usually cause you to exceed the time limit. There are two faster ways to calculate the inverse: the extended GCD algorithm, and Fermat's little theorem. Though the extended GCD algorithm is more versatile and sometimes slightly faster, the Fermat's little theorem method is more popular, simply because it's almost "free" once you implement exponentiation, which is also often a useful operation in itself, so that's what we'll cover here.

Fermat's little theorem says that as long as the modulus m

is a prime number (109+7

is prime, and so is 998 244 353

, another common modulus in these problems), then am m=a mod m

. Working backwards, am−1 mod m=1=a⋅am−2 mod m

, therefore the number we need is am−2 mod m

.

Note that this only works for a mod m≠0

, because there is no number x

such that 0⋅x mod m=1

. In other words, you still can't divide by 0

, sorry.

Multiplying m−2

times would still take too long; therefore a trick known as exponentiation by squaring is needed. It's based on the observation that for positive integer n

, that if n

is odd, xn=x(x2)n-1⁄2

, while if n

is even, xn=(x2)n⁄2

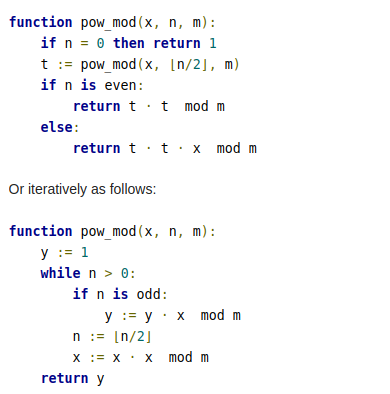

. It can be implemented recursively by the following pseudocode:

.

Now that you know how to compute the modular multiplicative inverse (to refresh, a−1=am−2 mod m

when m

is prime), you can now define the division operator:

a/b mod m=a⋅b−1 mod m

This also extends the mod

operator to rational numbers (i.e. fractions), as long as the denominator is coprime to m

. (Thus the reason for choosing a fairly large prime; that way puzzle writers can avoid denominators with m

as a factor). The four basic operations, as well as exponentiation, will still work on them as usual. Again, you generally never need to store the fractions as their "true" values, only their residues modulo m

.

Congratulations! You have now mastered Z⁄pZ

field arithmetic! A "field" is just a fancy term from abstract algebra theory for a set with the four basic operators (addition, subtraction, multiplication, division) defined in a way that works just like you've learned in high-school for the rational and real numbers (however division by zero is still undefined), and Z⁄pZ

is just a fancy term meaning the set of integers from 0

to p−1

treated as residues modulo p

.

This also means that algebra works much like the way you learned in high school. How to solve (3=4x+5 mod 109+7

)? Simply pretend that x

is a real number and get (x=−1/2 mod 109+7=500000003)

. (Technically, all x

whose residue is 500000003

, including rationals, will satisfy the equation.)

You can also now take advantage of combinatoric identities, like (nk)= n!⁄k!(n−k)!

. The factorials can be too big to store in their true form, but you can store their modular residues instead, then use modular multiplicative inverse to do the "division".

There are only a few things you need to be careful of, like:

Permutation

Some competitive programming techniques related to Permutation.

Longest increasing subsequences and Erdos Szekeres theorem

An increasing subsequence of an array a

(not necessarily a permutation) is a sequence of indices i1< i2<⋯< ik

such that ai1< ai2<⋯< aik

. Decreasing subsequences are defined similarly.

An algorithm to find a longest increasing (or, with a few modifications, non-decreasing) subsequence of an array can be found in this nice video tutorial. But that is not what we are concerned with at the moment.

We are concerned with bounds on the length of any longest increasing subsequence (LIS from here on). However, for decreasing subsequences, an LIS has length 1

. The Erdos Szekeres theorem tells us that in such cases, the length of the longest decreasing subsequence will be large.

More formally, the theorem states that in any permutation (or array with distinct elements) of size at least xy+1

, there is either an increasing subsequence of length x+1

or a decreasing subsequence of length y+1.

The easiest way to prove this theorem is via an application of the Pigeonhole principle.

Suppose for the sake of contradiction that the theorem is false for some permutation a

. For every i

, consider the length of a longest increasing subsequence ending at index i

and the length of a longest decreasing subsequence ending at index i

. Let's call these numbers xi

and yi

. Note that all xi

-s are integers between 1

and x

, and all yi

-s are integers between 1

and y

. So there can be at most xy

distinct pairs (xi,yi)

. By the Pigeonhole principle, there are i< j

such that (xi,yi)=(xj,yj)

. Since all elements are distinct, ai < aj

or ai>aj

. In the first case, it is impossible that xi=xj

, and in the latter, it is impossible that yi=yj

. This is a contradiction, and we are done.

A more sophisticated and deeper proof of this theorem can be done using Dilworth's theorem. This blog uses it to prove a special case of this theorem, though the proof can be modified easily to work for the complete proof too.

Next permutation

Note that permutations are just sequences of integers, so it is possible to sort the set of all possible sequences of size n

lexicographically (i.e., in the order you would find words in a dictionary). This defines a natural indexing of each permutation. How do we find the next permutation from a given permutation?

The easiest way (in C++) is to use std::next_permutation, but we'll briefly describe how it works.

Let's find the first index i

from the right so that ai>ai−1

. Since i

was the first index satisfying this condition, all indices i

to n

must form a decreasing sequence. Note that the smallest number in this sequence that is larger than ai

will be the new element at position i

, and the rest of them (along with the current ai

) will be sorted in increasing order after it. All of this can be implemented in time O(n−i+1)

.

Note that starting from the lexicographically smallest permutation (which is [1,2,…,n]

), the number of permutations between (both inclusive) this permutation and a permutation whose first position i

such that ai≠i

is k

, is at least (n−k)!+1

. This means that if you apply next_permutation even a large number of times, the number of elements in the permutation that will ever change will not be large (unless you start from a specific permutation, and even in that case, apart from a single change for potentially all indices, the same conclusion holds).

So if for a permutation of length n

, you do the next_permutation operation (as implemented above) O(nk)

times, the time taken will be (much faster than) O(knklogn)

. You can even bound the number of operations to perform next_permutation r

times by O(r+n)

. The analysis is similar to the complexity analysis when you increment the binary representation of an n

-bit number r

times.

follow this link for more : Blog

Segment Tree

Some competitive programming techniques related to Segment Tree.

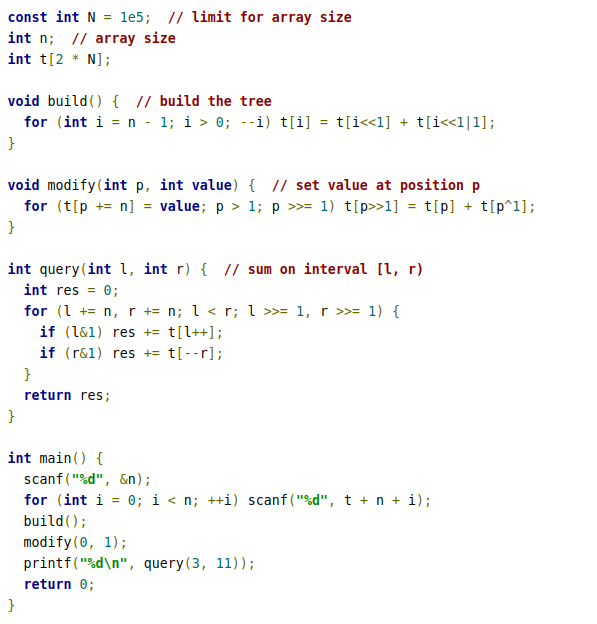

That's it! Fully operational example. Forget about those cumbersome recursive functions with 5 arguments!

Now let's see why this works, and works very efficient.

- As you could notice from the picture, leaves are stored in continuous nodes with indices starting with n, element with index i corresponds to a node with index i+n. So we can read initial values directly into the tree where they belong.

- Before doing any queries we need to build the tree, which is quite straightforward and takes O(n) time. Since parent always has index less than its children, we just process all the internal nodes in decreasing order. In case you're confused by bit operations, the code in build() is equivalent to t[i] = t[2*i] + t[2*i+1].

- Modifying an element is also quite straightforward and takes time proportional to the height of the tree, which is O(log(n)). We only need to update values in the parents of given node. So we just go up the tree knowing that parent of node p is p/2 or p>>1, which means the same. p^1 turns 2*i into 2*i+1 and vice versa, so it represents the second child of p's parent.

- Finding the sum also works in O(log(n)) time. To better understand it's logic you can go through example with interval [3,11) and verify that result is composed exactly of values in nodes 19, 26, 12 and 5 (in that order).

- General idea is the following. If l, the left interval border, is odd (which is equivalent to l&1) then l is the right child of its parent. Then our interval includes node l but doesn't include it's parent. So we add t[l] and move to the right of l's parent by setting l=(l+1)/2. If l is even, it is the left child, and the interval includes its parent as well (unless the right border interferes), so we just move to it by setting l=l/2. Similar argumentation is applied to the right border. We stop once borders meet. No recursion and no additional computations like finding the middle of the interval are involved, we just go through all the nodes we need, so this is very efficient.